An IEEE 754 binary floating-point number is a number that can be represented in normalized binary scientific notation. This is a number like $1.00000110001001001101111 \times 2^{-10}$, which has two parts: a significand, which contains the significant digits of the number, and a power of two, which places the “floating” radix point. For this example, the power of two turns the shorthand $1.00000110001001001101111 \times 2^{-10}$ into this ‘longhand’ binary representation: 0.000000000100000110001001001101111.

The significands of IEEE binary floating-point numbers have a limited number of bits, called the precision; single-precision has 24 bits, and double-precision has 53 bits. The range of power of two exponents is also limited: the exponents in single-precision range from -126 to 127; the exponents in double-precision range from -1022 to 1023. (The example above is a single-precision number.)
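To make the notation concrete, here is a minimal Python sketch that turns a significand string and an exponent into an exact value. The names `from_binary_scientific` and `to_single` are my own, chosen for illustration; rounding through `struct` is just one way to check that a value fits in single-precision's 24 bits.

```python
import struct
from fractions import Fraction

def from_binary_scientific(significand_bits: str, exponent: int) -> Fraction:
    """Exact value of a binary significand such as '1.011' times 2**exponent."""
    int_part, _, frac_part = significand_bits.partition(".")
    # Read all the bits as one integer, then put the radix point back.
    as_integer = int(int_part + frac_part, 2)
    return Fraction(as_integer, 2 ** len(frac_part)) * Fraction(2) ** exponent

def to_single(x: float) -> float:
    """Round a Python float (a double) to the nearest single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

example = from_binary_scientific("1.00000110001001001101111", -10)
print(float(example))                        # decimal approximation of the example
print(to_single(float(example)) == example)  # True if it survives as a single
```

Using `Fraction` keeps every step exact, so any rounding you see comes only from the final conversion to a float.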

Limited precision makes binary floating-point numbers discontinuous; there are gaps between them. Precision determines the number of gaps between consecutive powers of two, and precision and exponent together determine the size of those gaps. Gap size is constant within the interval between two consecutive powers of two, but differs from one such interval to the next.

The spacing explains many properties of floating-point numbers, like why conversions between binary and decimal floating-point numbers round-trip only under certain conditions, and why numbers of wildly different magnitudes don’t mix.

Spacing In a Toy Floating-Point Number System

Let’s look at the spacing of numbers in a “toy” floating-point number system, one with four bits of precision and an exponent range of -1 to 1. (I will only be discussing positive numbers; negative numbers have the same spacing, but as the mirror image.) With four bits of precision, there are eight 4-bit significands (they are normalized, so they have to start with ‘1’): 1.000, 1.001, 1.010, 1.011, 1.100, 1.101, 1.110, 1.111. Combined with the three exponents, there are 24 numbers this system describes:

| Scientific Notation | Binary | Decimal |
|---|---|---|
| $1.000 \times 2^{-1}$ | 0.1 | 0.5 |
| $1.001 \times 2^{-1}$ | 0.1001 | 0.5625 |
| $1.010 \times 2^{-1}$ | 0.101 | 0.625 |
| $1.011 \times 2^{-1}$ | 0.1011 | 0.6875 |
| $1.100 \times 2^{-1}$ | 0.11 | 0.75 |
| $1.101 \times 2^{-1}$ | 0.1101 | 0.8125 |
| $1.110 \times 2^{-1}$ | 0.111 | 0.875 |
| $1.111 \times 2^{-1}$ | 0.1111 | 0.9375 |
| $1.000 \times 2^{0}$ | 1 | 1 |
| $1.001 \times 2^{0}$ | 1.001 | 1.125 |
| $1.010 \times 2^{0}$ | 1.01 | 1.25 |
| $1.011 \times 2^{0}$ | 1.011 | 1.375 |
| $1.100 \times 2^{0}$ | 1.1 | 1.5 |
| $1.101 \times 2^{0}$ | 1.101 | 1.625 |
| $1.110 \times 2^{0}$ | 1.11 | 1.75 |
| $1.111 \times 2^{0}$ | 1.111 | 1.875 |
| $1.000 \times 2^{1}$ | 10 | 2 |
| $1.001 \times 2^{1}$ | 10.01 | 2.25 |
| $1.010 \times 2^{1}$ | 10.1 | 2.5 |
| $1.011 \times 2^{1}$ | 10.11 | 2.75 |
| $1.100 \times 2^{1}$ | 11 | 3 |
| $1.101 \times 2^{1}$ | 11.01 | 3.25 |
| $1.110 \times 2^{1}$ | 11.1 | 3.5 |
| $1.111 \times 2^{1}$ | 11.11 | 3.75 |

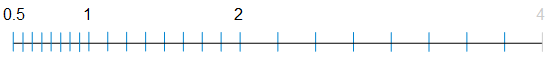

The numbers are written in order of magnitude, so they increase by one ULP from one row to the next. (Gap is synonymous with ULP; I use gap when my focus is on spacing, and I use ULP when my focus is on digits.) You get a better feel for spacing differences among intervals when you see the numbers written out longhand. Better still is a picture:

You can see that the smaller numbers have smaller gaps between them, and the larger numbers have larger gaps between them. Let’s look closer at how the number and size of these gaps is determined.
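If you want to reproduce the toy system yourself, the following Python sketch enumerates it and measures the gaps directly. The function name `toy_numbers` and its default arguments are my own choices for illustration; it assumes the same four bits of precision and exponents -1 to 1 used above.

```python
from fractions import Fraction

def toy_numbers(precision: int = 4, exponents=(-1, 0, 1)) -> list[Fraction]:
    """All positive numbers of the toy system, in increasing order."""
    numbers = []
    for e in exponents:
        for k in range(2 ** (precision - 1)):
            # Significands run from 1.000 to 1.111 in steps of 2**-(precision-1).
            significand = 1 + Fraction(k, 2 ** (precision - 1))
            numbers.append(significand * Fraction(2) ** e)
    return numbers

values = toy_numbers()
gaps = [b - a for a, b in zip(values, values[1:])]
for value, gap in zip(values, gaps):
    print(f"{float(value):<7} next number is {float(gap)} away")
```

The printed gaps stay constant within each power of two interval and double when the exponent increases.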

Gap Count

Gap count is determined by precision, so let’s start by counting the significands for each increment of precision:

- 1 bit of precision: There is one 1-bit significand: 1

- 2 bits of precision: There are two 2-bit significands: 1.0, 1.1

- 3 bits of precision: There are four 3-bit significands: 1.00, 1.01, 1.10, 1.11

- 4 bits of precision: There are eight 4-bit significands: 1.000, 1.001, 1.010, 1.011, 1.100, 1.101, 1.110, 1.111

- …

From the pattern, you can see that there are $2^{p-1}$ p-bit significands. More formally, think of the significands as integers (disregard the radix point). All p-bit integers lie in the interval $[2^{p-1}, 2^p)$, so there are $2^p - 2^{p-1} = 2^{p-1}$ of them.

Another way to think of it is this: since the leading bit is “stuck” at 1, there are only $p-1$ bits left to vary, and thus $2^{p-1}$ combinations. The leading ‘1’ bit sets the power of two interval, and the $p-1$ fractional bits carve it up.

The number of significands is the same as the number of gaps, since there is a gap from the highest significand to the next power of two.

Gap count = $2^{p-1}$

If we go from precision $p$ to precision $p+1$, we’ll have $2^p$ gaps. That is, the number of gaps doubles.
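The counting argument is easy to check by brute force. This is a small Python sketch, with a helper name (`significands`) that I made up for the purpose; it lists the p-bit integers and writes each one with a radix point after the leading bit.

```python
def significands(p: int) -> list[str]:
    """All p-bit normalized significands, written with a radix point."""
    result = []
    for n in range(2 ** (p - 1), 2 ** p):   # the p-bit integers
        bits = format(n, "b")
        result.append(bits[0] + ("." + bits[1:] if p > 1 else ""))
    return result

for p in range(1, 5):
    found = significands(p)
    print(f"{p} bit(s): {len(found)} significands: {', '.join(found)}")
```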

Gap Size

All binary floating-point numbers with power of two exponent $e$ are in the interval $[2^e, 2^{e+1})$. (The interval includes $2^e$ but excludes $2^{e+1}$.) The length of this interval is $2^{e+1} - 2^e = 2^e$.

Each interval has the same number of equal-sized gaps, which is determined by the precision $p$. The size of each gap in each interval is the length of the interval divided by the number of gaps: $2^e / 2^{p-1} = 2^{e-(p-1)} = 2^{e+1-p}$.

Gap size = $2^{e+1-p}$

Each gap is a power of two in size, and depends on the exponent and precision. With each increment of exponent, gap size doubles; with each increment of precision, gap size halves. So if you increment the exponent and precision at the same time (or if you decrement both at the same time), the gap size will remain the same.

You can think of the 1-p part of the formula as shifting the exponent from the highest bit of the significand to the lowest bit. The lowest bit represents one ULP, and its place value is the gap size.
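One way to convince yourself of the formula is to compare it with what the hardware reports. The sketch below assumes Python 3.9 or later (for `math.ulp`) and that Python floats are IEEE doubles, so p = 53; `gap_size` and `exponent_of` are illustrative names of my own.

```python
import math

def gap_size(e: int, p: int) -> float:
    """Gap size 2**(e + 1 - p) for exponent e and precision p."""
    return 2.0 ** (e + 1 - p)

def exponent_of(x: float) -> int:
    """Exponent e such that 2**e <= x < 2**(e + 1), for positive normal x."""
    _, exp = math.frexp(x)    # frexp scales its significand to [0.5, 1)
    return exp - 1

for x in (0.75, 1.0, 3.0, 1e10):
    e = exponent_of(x)
    # The last two columns should agree: formula versus math.ulp.
    print(x, e, gap_size(e, 53), math.ulp(x))
```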

Let’s see how the spacing in our toy system changes as we go from 1 to 4 bits of precision, for exponents -1, 0, and 1:
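The progression can be sketched in a few lines of Python. The helper `interval_points` is a name I chose for illustration; it lists the numbers in one power of two interval for a given exponent and precision, using the gap size and gap count formulas from above.

```python
from fractions import Fraction

def interval_points(e: int, p: int) -> list[Fraction]:
    """The numbers in [2**e, 2**(e + 1)) at precision p."""
    gap = Fraction(2) ** (e + 1 - p)   # gap size formula
    count = 2 ** (p - 1)               # gap count formula
    return [Fraction(2) ** e + k * gap for k in range(count)]

for p in range(1, 5):
    row = [float(x) for e in (-1, 0, 1) for x in interval_points(e, p)]
    print(f"p={p}: {row}")
```

Each extra bit of precision keeps every existing number and adds a new one in the middle of every gap.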

Spacing With Increased Precision and Range

Moving beyond our toy system, let’s see how the spacing changes with increased precision and range.

Spacing Between Nonnegative Powers of Two

This table shows the gap counts and gap sizes between nonnegative powers of two, for various combinations of precision and exponent. It shows the spacing in the toy system and beyond, including spacing in sample intervals of the IEEE single-precision and double-precision number systems:

| Bits | Count | Size in [1,2) | Size in [2,4) | Size in [4,8) | … | Size in $[2^{127}, 2^{128})$ | … | Size in $[2^{1023}, 2^{1024})$ |
|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 1 | 2 | 4 | … | $2^{127}$ | … | $2^{1023}$ |
| 2 | 2 | 0.5 | 1 | 2 | … | $2^{126}$ | … | $2^{1022}$ |
| 3 | 4 | 0.25 | 0.5 | 1 | … | $2^{125}$ | … | $2^{1021}$ |
| 4 | 8 | 0.125 | 0.25 | 0.5 | … | $2^{124}$ | … | $2^{1020}$ |
| … | … | … | … | … | … | … | … | … |
| 24 | $2^{23}$ | **$2^{-23}$** | $2^{-22}$ | $2^{-21}$ | … | $2^{104}$ | … | $2^{1000}$ |
| … | … | … | … | … | … | … | … | … |
| 53 | $2^{52}$ | **$2^{-52}$** | $2^{-51}$ | $2^{-50}$ | … | $2^{75}$ | … | $2^{971}$ |

Integer Spacing

When the gap size is 1, all floating-point numbers in the interval are integers. For single-precision, this occurs at exponent 23, the interval $[2^{23}, 2^{24})$. For double-precision, this occurs at exponent 52, the interval $[2^{52}, 2^{53})$. (Those two ranges are not shown in the table.)
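Here is a short Python sketch of what integer spacing looks like in practice. It assumes Python 3.9 or later for `math.ulp`, and it simulates single-precision by rounding through `struct` (the helper `to_single` is my own name for that step).

```python
import math
import struct

def to_single(x: float) -> float:
    """Round a Python float (a double) to the nearest single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

# Double-precision: gap size is 1 in [2**52, 2**53) and 2 in [2**53, 2**54).
print(math.ulp(2.0 ** 52))             # gap size at the start of [2**52, 2**53)
print(2.0 ** 52 + 0.25 == 2.0 ** 52)   # a fraction cannot be stored here
print(2.0 ** 53 + 1.0 == 2.0 ** 53)    # past 2**53, odd integers are skipped too

# Single-precision: the same thing happens at 2**23 and 2**24.
print(to_single(2.0 ** 23 + 0.25) == 2.0 ** 23)
print(to_single(2.0 ** 24 + 1.0) == 2.0 ** 24)
```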

Those Gaps Get Huge

It’s easy to lose feel for the magnitude of these gaps. For example, in the neighborhood of 1e300, which in double-precision converts to $1.01111110010000111100100010000000000001110101100111 \times 2^{996}$, the gap size is a whopping $2^{944}$. This explains why adding 1, 10000, or even

74350845423889153139903124907495028006563010082969722952788592965797032858020218677258415724807817529489936189509648652522729262277245285389541529055119731289148542022372993697134320491714886843511959789117571860303387435343894004407854947017432904150602255272707195641188267440734208

(that’s $2^{943}$) to 1e300 still gives you 1e300. ($2^{943}$ is half the gap size, but the result rounds down due to round-half-to-even rounding.)
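You can watch this happen with a few lines of Python. This sketch assumes Python 3.9 or later, since it uses `math.ulp` and `math.nextafter`; the variable names are mine.

```python
import math

x = 1e300
gap = math.ulp(x)        # spacing of doubles around 1e300
half_gap = gap / 2

print(gap == 2.0 ** 944)       # the gap size formula with e = 996, p = 53
print(x + 1 == x)              # far too small to matter
print(x + half_gap == x)       # exactly halfway: the tie rounds back to x
# Anything even slightly more than half a gap rounds up to the next double.
print(x + math.nextafter(half_gap, math.inf) == x + gap)
```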

Spacing Between Nonpositive Powers of Two

This table shows the same information for nonpositive powers of two, covering the toy system as well as showing sample ranges in single and double-precision (the same formulas for gap count and gap size apply):

| Bits | Count | Size in $[2^{-1022}, 2^{-1021})$ | … | Size in $[2^{-126}, 2^{-125})$ | … | Size in [0.125,0.25) | Size in [0.25,0.5) | Size in [0.5,1) |
|---|---|---|---|---|---|---|---|---|
| 1 | 1 | $2^{-1022}$ | … | $2^{-126}$ | … | 0.125 | 0.25 | 0.5 |
| 2 | 2 | $2^{-1023}$ | … | $2^{-127}$ | … | 0.0625 | 0.125 | 0.25 |
| 3 | 4 | $2^{-1024}$ | … | $2^{-128}$ | … | 0.03125 | 0.0625 | 0.125 |
| 4 | 8 | $2^{-1025}$ | … | $2^{-129}$ | … | 0.015625 | 0.03125 | 0.0625 |
| … | … | … | … | … | … | … | … | … |
| 24 | $2^{23}$ | $2^{-1045}$ | … | $2^{-149}$ | … | $2^{-26}$ | $2^{-25}$ | $2^{-24}$ |
| … | … | … | … | … | … | … | … | … |
| 53 | $2^{52}$ | $2^{-1074}$ | … | $2^{-178}$ | … | $2^{-55}$ | $2^{-54}$ | $2^{-53}$ |

As you can see, for the extreme negative exponents, the gaps get very tiny.

Machine Epsilon

I highlighted two values in the first table, the [1,2) gap sizes for 24 and 53 bits of precision; these are known as machine epsilon in IEEE binary floating-point. Machine epsilon is determined by the precision; it equals $2^{1-p}$. For single-precision, it is $2^{-23}$; for double-precision, it is $2^{-52}$.

Machine epsilon is just the gap size in [1,2). You can see this by plugging $e = 0$ into the gap size formula. You can think of all gaps as power of two multiples of machine epsilon: $2^e \cdot 2^{1-p} = 2^{e+1-p}$.
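A quick Python check of these relationships, assuming Python 3.9 or later and IEEE doubles. The function `machine_epsilon` is an illustrative name of mine; `sys.float_info.epsilon`, `sys.float_info.mant_dig`, and `math.ulp` come from the standard library.

```python
import math
import sys

def machine_epsilon(p: int) -> float:
    """Gap size in [1, 2) for precision p."""
    return 2.0 ** (1 - p)

print(machine_epsilon(24))   # single-precision
print(machine_epsilon(53))   # double-precision
print(machine_epsilon(sys.float_info.mant_dig) == sys.float_info.epsilon == math.ulp(1.0))

# Every gap is a power of two multiple of machine epsilon: 2**e * 2**(1 - p).
for e in (-3, 0, 10):
    print(e, math.ulp(2.0 ** e) == 2.0 ** e * sys.float_info.epsilon)
```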

Subnormal Numbers

To this point we have ignored subnormal numbers, the floating-point numbers that fill the space between zero and the smallest normal floating-point number. The gaps in the subnormal range do not change size; they are equal, even though they effectively span a range of exponents.

Think of a subnormal number as a normal number with less precision. For example, the subnormal single-precision number $0.0000001000101101100001 \times 2^{-126}$ can be written as $1.000101101100001 \times 2^{-133}$. This form shows the true exponent, which in turn shows the true precision; the exponent decreased by seven, which means the precision decreased by seven as well. For any subnormal number, exponent and precision decrease by the same amount, canceling out any change in gap size.

Use the gap size formula on any such “normalized subnormal” to compute the gap size for all subnormal numbers. In our example, the reduced precision is 17, and the normalized exponent is -133; this makes the gap size for subnormal single-precision numbers $2^{-133+1-17} = 2^{-149}$. Similarly, for double-precision, take any example and do the same: $0.0000000000000010111100100000000111010100100111111011 \times 2^{-1022}$ normalizes to $1.0111100100000000111010100100111111011 \times 2^{-1037}$, resulting in 38 bits of precision and thus a gap size of $2^{-1037+1-38} = 2^{-1074}$.

Without subnormal numbers, there would be a relatively huge gap between 0 and the smallest normal number. For double-precision, that gap would be $2^{-1022}$. Instead, we carve it up into gaps of size $2^{-1074}$. These subnormal gaps are the same size as the gaps between the smallest normal numbers.

Double-precision subnormal numbers live in $[2^{-1074}, 2^{-1022})$; single-precision subnormal numbers live in $[2^{-149}, 2^{-126})$.
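The constant subnormal spacing can be checked for doubles with a short Python sketch. It assumes Python 3.9 or later, where `math.ulp(0.0)` returns the smallest positive subnormal; the constant names `P` and `E_MIN` are my own.

```python
import math
import sys

P = sys.float_info.mant_dig           # 53 bits of precision for doubles
E_MIN = sys.float_info.min_exp - 1    # -1022 (min_exp uses a [0.5, 1) significand)

smallest_normal = sys.float_info.min  # 2**-1022
subnormal_gap = math.ulp(0.0)         # the smallest positive subnormal

print(subnormal_gap == 2.0 ** (E_MIN + 1 - P))       # gap size formula at e = -1022
print(math.ulp(smallest_normal) == subnormal_gap)    # same gap as the smallest normals
print(math.ulp(2.0 ** -1040) == subnormal_gap)       # unchanged deep in the subnormal range
print(2.0 ** (-1037 + 1 - 38) == subnormal_gap)      # the "normalized subnormal" example
print(smallest_normal / subnormal_gap == 2.0 ** 52)  # number of gaps between 0 and 2**-1022
```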

On The Term Binade

Many floating-point experts use the term binade to describe the interval $[2^e, 2^{e+1})$. I have avoided that term in this article, mostly because I haven’t also been talking about decimal spacing (binade is the binary equivalent of decade), but partly because I don’t like it. (Maybe it will grow on me, when I become an expert 🙂 .) I use the term interval, like David Goldberg does.

References

These were my main online references:

- “Floating Point Representation and the IEEE Standard” by Michael L. Overton.

- “Floating points” by Cleve Moler. (He makes one mistake though: the spacing in [1,2) is $2^{-2}$, not $2^{-3}$.)

Regarding the word “binade”, here is a use in context:

— I can never remember which of “sqrt(x)*sqrt(x) == x” or “sqrt(x*x) == x” is true. It would be great if it’s the first one, because then no worries for overflows and underflows!

— Yes, it would be great, but out of an operation that maps one binade to two, and another that maps two binades to a single one, which do you want to have as the first of a sequence that is supposed to return x exactly?

The word “interval” in itself does not convey the notions that allow the mnemotechnic trick above.

Unless you redefine “interval” to mean “binade”, but that redefinition, unlike that of “mantissa”, would be terribly ambiguous considering how useful the original meaning is in the context of floating-point.

Pascal,

I think for my purposes here I can get away with “interval,” but I agree that it is a little fuzzy for real math work. When it comes time to talk about both decimal and binary gaps together, I am going to have to clarify the term. Will I say “binary interval” and “decimal interval”? I don’t know. I can probably make them work in context, but they are still fuzzy compared to binade and decade.

(Then again, I came to like the term bicimal, so go figure.)

Nice article. The number line for the toy FP system was particularly useful.

It would be even better if you could have added denormalised numbers to your toy system to show how they work, and the way they would fill in the gap between 0 and 0.5.

@Paul,

Thanks. I didn’t want to put subnormals into the picture too early, but I suppose I could have put an updated version of the diagram into the subnormal section.

It’ll be great if you could add denormalized numbers as well to the toy system.

And thanks for such a neat explanation on floating point numbers. I haven’t come across an explanation neater than this.

@Shiva S,

Subnormal numbers (the preferred term to “denormal numbers”) would be easy enough to work in. Between 0 and $2^{-1}$ (0.5) there would be 8 gaps of size $2^{-4}$.

(Thanks for the compliment.)