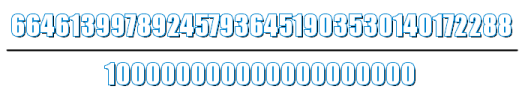

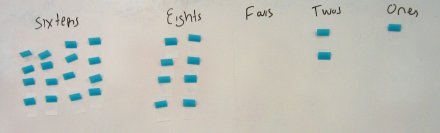

In my article “Using Integers to Check a Floating-Point Approximation,” I briefly mentioned “bigcomp,” an optimization strtod() uses to reduce big integer overhead when checking long decimal inputs. bigcomp does a floating-point to decimal conversion — right in the middle of a decimal to floating-point conversion mind you — to generate the decimal expansion of the number halfway between two target floating-point numbers. This decimal expansion is compared to the input decimal string, and the result of the comparison dictates which of the two target numbers is the correctly rounded result.

In this article, I’ll explain how bigcomp works, and when it applies. Also, I’ll talk briefly about its performance; my informal testing shows that, under the default setting, bigcomp actually worsens performance for some inputs.

Continue reading “Bigcomp: Deciding Truncated, Near Halfway Conversions”