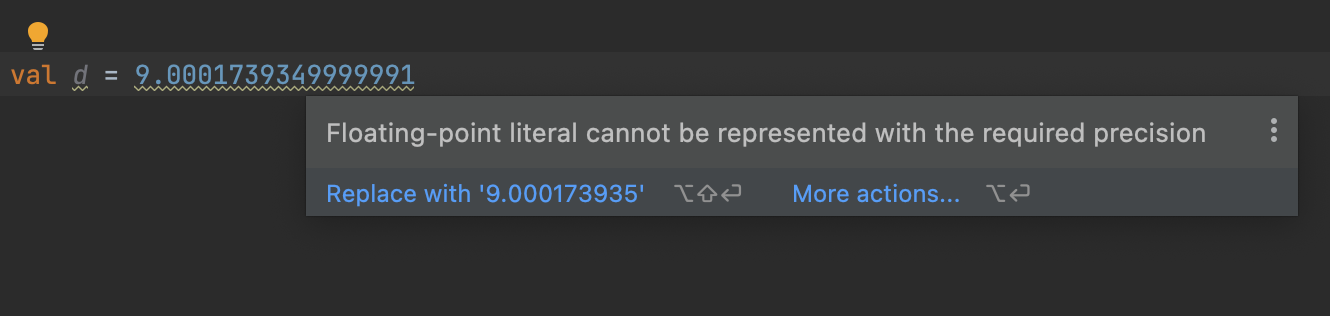

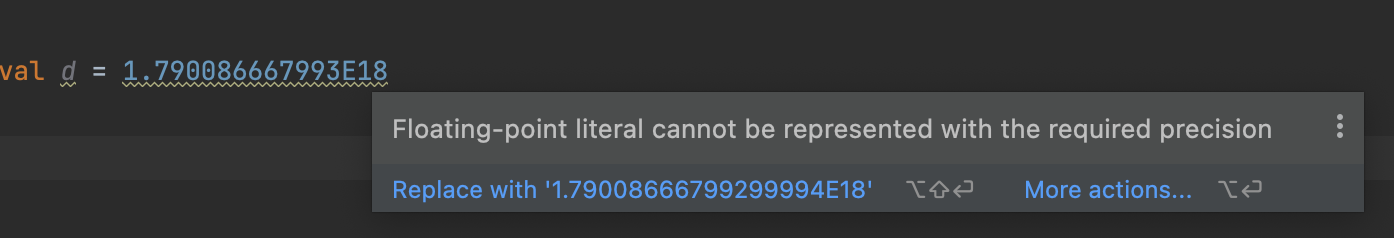

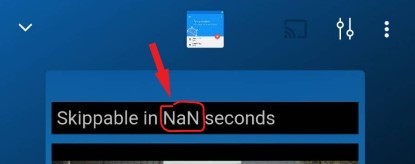

I was testing David Gay’s most recent fixes to strtod() with different rounding modes and discovered that Apple Clang C++ (Xcode) and Microsoft Visual Studio C++ produce incorrect results in round-toward-zero and round-down modes: their strtod() implementations convert numbers greater than DBL_MAX to infinity, not DBL_MAX. At first I thought Gay’s strtod() was wrong, but Dave pointed out that the IEEE 754 spec requires such conversions to be monotonic.

Continue reading “Numbers Greater Than DBL_MAX Should Convert To DBL_MAX When Rounding Toward Zero”